The Fall of AI: How the rest of 2023 will shape the trajectory of AI regulation

The Download | Vol. 1, Issue 1 | Fall 2023

What’s New:

Congress and the Biden administration want the rest of the year to be a time of learning and of laying the foundation for future action on artificial intelligence (AI). The next four months promise to be busy with briefings, hearings, executive actions, and international engagements, all with one focus – preparing the United States for the age of AI.

Why It Matters:

Now is the time for industry leaders to engage. After being surprised last November by the capabilities and popularity of large language models (LLMs) like OpenAI’s ChatGPT, policymakers on both sides of the aisle are looking to industry and civil society for information and ideas on how to seize AI’s opportunities while mitigating the technology’s risks.

Key Points:

- Having secured an initial series of public commitments on the responsible development of AI from seven leading companies – Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI – the White House will spend the rest of 2023 trying to convince other governments to adopt these standards.

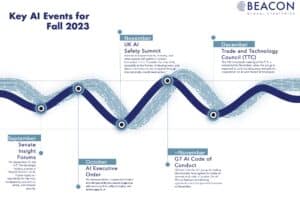

- The UK’s Global Summit on AI Safety in November, a possible G7 AI Code of Conduct, and the next meeting of the U.S.-EU Trade and Technology Council (TTC) in December will be critical to these efforts.

- The Administration is also expected to issue an Executive Order on AI in October. While not finalized, it reportedly will task executive agencies with assessing their ability to deploy, and defend against, AI. It may also request legislative language requiring AI cloud providers to report when customers cross a yet-to-be-defined threshold that would indicate the development of LLMs or similar advanced AI capabilities.

- While the House is still organizing, Senate Majority Leader Chuck Schumer is asserting his influence, offering his SAFE Innovation Framework (security, accountability, foundations, and explainability) and arranging a series of “Insight Forums” for the upper chamber that will explore everything from the economic opportunities to the national security risks of generative AI and other foundation models.

- During the second half of the fall, Senate leadership will direct key committee chairs to begin translating what they’ve learned into legislation for debate.

What We’re Thinking:

- Things are kicking off, but progress will be slow. Every legislator is eager to take a bite of the AI apple. Once the Senate shifts to crafting legislation, expect committee chairs – from Commerce, Science, and Transportation’s Sen. Maria Cantwell to Armed Services’ Sen. Jack Reed – to make big, and at times contradictory, plays on AI. This diversity of thought offers many advantages, but it will almost certainly delay comprehensive AI regulation.

- Multilateral alignment will also take time. While the White House is eager to show progress, rallying allies and partners around American AI principles will not happen overnight. With multiple countries vying for AI leadership and AI regulation on the agendas of key multilateral forums this fall, short-term consensus is unlikely. Aligning with EU partners will be particularly difficult: the EU AI Act characteristically opts to overregulate tech giants, most of them American, in ways that even EU business leaders warn will stifle innovation and possibly benefit dangerous Chinese AI developers.

- But now is the time to engage. Once the U.S. government aligns itself around a regulatory approach, it will become less receptive to third-party input. Stakeholders should be directly engaging officials who are taking a leading role on AI and exercising thought leadership through channels likely to reach those officials, including select multilateral forums, councils, think tank events, and media outlets.

- A posture of urgent optimism will win out. Successful influencers will demonstrate their understanding that AI regulation is needed and align their proposals with the U.S. government’s concerns. This means – where sensible – advocating for principles contained in Sen. Schumer’s SAFE Innovation Framework and the White House’s voluntary commitments. But influencers will also demonstrate excitement about the immense economic and societal benefits AI will bring if the United States keeps regulation focused and fair. The more clearly you show policymakers a path to these benefits, the greater your influence will be.

- On AI and national security issues, be an informed partner. Recognize that AI is at the heart of U.S. national security and technology policy. AI companies, therefore, should expect frequent and deliberate government engagement. Be at the table (or be on it). Make it a point to understand, and to communicate your understanding of, U.S. government security concerns in every encounter. Finally, if the government is doing something wrong, help officials understand how the action hurts national security (if applicable), and offer a better way.

More about The Download

The Download is for leaders in government, industry, and civil society who operate at the intersection of national security and global business. Produced on an as-needed basis, this product concisely explains important issues and places them in context with data and analysis – with the specific intent of enabling action.

More about Beacon Global Strategies

Beacon supports clients across defense and national security policy, geopolitical risk, global technology policy, and federal business development. Founded in 2013, Beacon develops and supports the execution of bespoke strategies to mitigate business risk, drive growth, and navigate an increasingly complex geopolitical environment. Through its bipartisan team and decades of experience, Beacon provides a global perspective to help clients tackle their toughest challenges.

Learn more at bgsdc.com or reach out to bgs@bgsdc.com